Introduction

The job market is evolving at an unprecedented pace, making it essential for recruitment teams to move beyond conventional sourcing approaches. As organizations expand globally, HR managers and talent acquisition specialists need timely, accurate, and well-structured job market data to make strategic hiring decisions. Automating the collection of Google Jobs listings directly from search results lets recruiters uncover insights into hiring trends, competitor recruitment strategies, and market salary ranges.

With a Python script that scrapes job listings, recruitment analysts can track, extract, and process data from Google’s job postings section, turning scattered information into usable insights. This guide walks through each stage, from environment setup to building a structured dataset, so the results can feed directly into your HR analytics framework for more strategic and informed decision-making.

Understanding the Benefits of Automated Job Data Collection

Manually collecting job postings is tedious and prone to human error, often leaving you with incomplete or outdated information. Automated job data collection and job board scraping with Python let recruitment teams gather data much faster while keeping it accurate and relevant, freeing time for strategic hiring decisions instead of repetitive administrative tasks.

Key Advantages of Automated Job Data Collection:

- Accurate market mapping: Automated systems can continuously scan multiple job boards, company career pages, and professional networks to quickly identify skills, job roles, and salary patterns that are currently in demand. This enables recruiters to maintain a clear understanding of the evolving labor market and adjust hiring strategies accordingly.

- Real-time tracking: With automation, job posting data is updated continuously, allowing you to capture the frequency of postings and detect signs of hiring urgency. This real-time insight helps recruiters prioritize outreach efforts and engage with candidates at the optimal time.

- Competitive benchmarking: Automated tools can monitor and analyze competitors’ recruitment activities, such as the roles they are hiring for, the salary ranges offered, and location preferences. This intelligence helps organizations benchmark their hiring practices and maintain a competitive edge in attracting top talent.

- Data integration: The collected job data can be seamlessly fed into recruitment dashboards, applicant tracking systems, or business intelligence tools. This integration ensures that insights are instantly accessible and can be used to drive informed hiring decisions without additional manual processing.

In short, automated job data collection not only eliminates repetitive manual work but also builds a scalable, reliable, and continuous flow of recruitment intelligence, helping businesses respond quickly to changing hiring trends and competitive pressures.

Step-by-Step Guide to Building Your Job Scraper

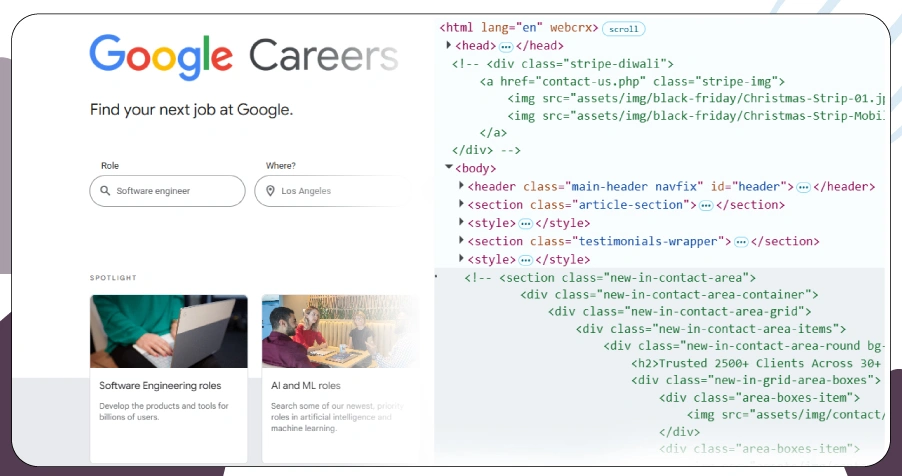

Google’s job search feature aggregates listings from various job boards and corporate career pages. Although Google offers no free official API for these results, you can still scrape Google Jobs listings with structured HTML parsing and careful request management.

Setting Up the Python Environment

Before beginning, make sure your system is prepared for automation tasks. Install Python 3.10+ along with the essential libraries below:

```shell
pip install requests beautifulsoup4 pandas lxml
```

These libraries let you send requests to the Google search results page, parse the HTML structure, and transform the extracted data into an organized, tabular format for analysis.

Step 1: Sending the Request

The first stage is building a search URL for your target keyword and location. Because this approach stands in for an official Google Jobs API, the request should resemble a real browser request; this reduces the chance of being blocked and keeps the HTML structure Google returns consistent.

Using the requests library with a browser-like User-Agent header makes the response more likely to match what a regular user sees.

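```python
import requests


def fetch_google_job_results(job_title: str, city: str) -> str:
    """Fetch the raw HTML of a Google Jobs search for a job title and city."""
    base_url = "https://www.google.com/search"
    # Let requests URL-encode the query (spaces, '+', etc.) instead of
    # concatenating it into the URL by hand.
    params = {"q": f"{job_title} jobs in {city}", "ibp": "htl;jobs"}
    # Browser-like User-Agent so Google serves its regular HTML
    request_headers = {"User-Agent": "Mozilla/5.0"}
    job_page = requests.get(base_url, params=params, headers=request_headers, timeout=10)
    job_page.raise_for_status()  # stop early on 4xx/5xx responses
    return job_page.text


# Example call
page_source = fetch_google_job_results("Python Developer", "New York")
```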

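In this step, the script sends an HTTP request with a browser-like User-Agent header. This brings the response closer to what a regular visitor would see, although Google may still return different markup, a consent page, or a CAPTCHA, so check the returned HTML before parsing it.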
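
Google may answer rapid or repeated requests with HTTP 429 (Too Many Requests). One way to make the request step more robust is a shared `requests.Session` that retries transient errors with exponential back-off. The sketch below assumes urllib3 1.26 or newer (where `Retry` accepts `allowed_methods`); `build_session` is a hypothetical helper name and the retry numbers are illustrative defaults, not tuned values.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def build_session(total_retries: int = 3, backoff: float = 1.0) -> requests.Session:
    """Return a Session that retries rate-limited and transient server errors."""
    retry = Retry(
        total=total_retries,
        backoff_factor=backoff,  # exponential back-off between attempts
        status_forcelist=[429, 500, 502, 503, 504],
        allowed_methods=["GET"],  # only retry idempotent GET requests
    )
    adapter = HTTPAdapter(max_retries=retry)
    session = requests.Session()
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    # Headers set here are sent with every request made through the session
    session.headers.update({"User-Agent": "Mozilla/5.0"})
    return session


session = build_session()
# response = session.get(
#     "https://www.google.com/search",
#     params={"q": "Python Developer jobs in New York", "ibp": "htl;jobs"},
#     timeout=10,
# )
```

By default, urllib3's `Retry` also honors a `Retry-After` header on 429 and 503 responses, so the session waits as long as the server asks before trying again.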

Step 2: Parsing the HTML

After successfully retrieving the HTML content, the next step is to process it using BeautifulSoup for structured data extraction. This library allows you to navigate through the HTML elements and precisely identify job listing components for further analysis.

```python
from bs4 import BeautifulSoup

# Parse the HTML returned in Step 1 (page_source) with the lxml parser
soup = BeautifulSoup(page_source, "lxml")

# Store parsed job data
jobs = []

# Google's class names are auto-generated and change often; verify these
# selectors against the live page in your browser's developer tools.
for card in soup.select(".BjJfJf.PUpOsf"):
    title_elem = card.select_one(".BjJfJf.PUpOsf span")
    company_elem = card.select_one(".vNEEBe")
    location_elem = card.select_one(".Qk80Jf")

    # Record None when a field is missing from a card
    jobs.append({
        "title": title_elem.get_text(strip=True) if title_elem else None,
        "company": company_elem.get_text(strip=True) if company_elem else None,
        "location": location_elem.get_text(strip=True) if location_elem else None,
    })
```

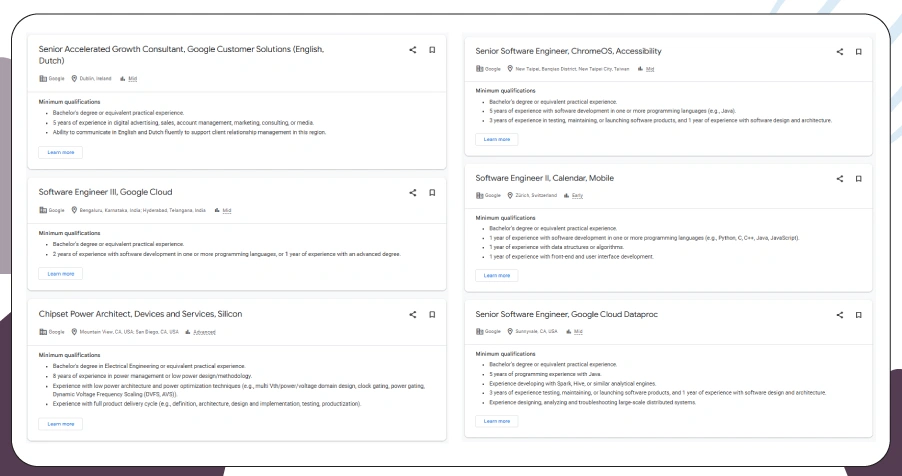

The result is a list of records holding job titles, company names, and locations, the primary data points when you extract job data from Google for recruitment insights and analytics.
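
Because Google's markup changes often, it helps to test the parsing logic offline before pointing it at live results. The sketch below wraps the Step 2 loop in a hypothetical `parse_job_cards` function and runs it on hand-written HTML that only imitates the class names above; it is not real Google output. It uses Python's built-in `html.parser` so the example does not need lxml, and it selects the title with `span` alone because each card already carries the `BjJfJf PUpOsf` classes.

```python
from bs4 import BeautifulSoup


def parse_job_cards(html: str) -> list[dict]:
    """Extract title, company and location from each job card in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    jobs = []
    for card in soup.select(".BjJfJf.PUpOsf"):
        title_elem = card.select_one("span")
        company_elem = card.select_one(".vNEEBe")
        location_elem = card.select_one(".Qk80Jf")
        jobs.append({
            "title": title_elem.get_text(strip=True) if title_elem else None,
            "company": company_elem.get_text(strip=True) if company_elem else None,
            "location": location_elem.get_text(strip=True) if location_elem else None,
        })
    return jobs


# Hypothetical, hand-written markup that mimics the class names used above
SAMPLE_HTML = """
<div class="BjJfJf PUpOsf">
  <span>Python Developer</span>
  <div class="vNEEBe">Acme Corp</div>
  <div class="Qk80Jf">New York, NY</div>
</div>
<div class="BjJfJf PUpOsf">
  <span>Data Engineer</span>
  <div class="vNEEBe">Globex</div>
</div>
"""

print(parse_job_cards(SAMPLE_HTML))
```

The second card deliberately lacks a location, which confirms that missing fields come back as `None` instead of raising an error.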

Step 3: Structuring Data

A well-structured job listing dataset ensures that the collected information can be processed, stored, and analyzed consistently.

```python
import pandas as pd

# Convert the extracted job records into a DataFrame
job_data_frame = pd.DataFrame(jobs)

# Save the structured dataset as a CSV file without the index
job_data_frame.to_csv("google_jobs.csv", index=False)
```

Once the dataset is exported, HR analysts can load it into platforms such as Power BI, Tableau, or in-house reporting dashboards for deeper recruitment trend analysis and data-driven HR decisions.
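
When the scraper runs repeatedly, overwriting a single CSV loses history, while blindly appending creates duplicates. A minimal sketch, assuming you want one row per listing per scrape date: the hypothetical `append_snapshot` helper stamps each run with a `scraped_on` column, merges it with the existing file, and drops repeated title/company/location rows for the same day. It assumes the CSV was created by this helper, so the columns line up.

```python
from datetime import date
from pathlib import Path
from typing import Optional

import pandas as pd


def append_snapshot(jobs: list[dict], csv_path: str, scraped_on: Optional[date] = None) -> pd.DataFrame:
    """Append one scrape to a CSV history, dropping duplicate listings."""
    snapshot = pd.DataFrame(jobs, columns=["title", "company", "location"])
    snapshot["scraped_on"] = (scraped_on or date.today()).isoformat()

    path = Path(csv_path)
    if path.exists():
        history = pd.concat([pd.read_csv(path), snapshot], ignore_index=True)
    else:
        history = snapshot

    # The same title/company/location seen twice on one day counts once
    history = history.drop_duplicates(subset=["title", "company", "location", "scraped_on"])
    history.to_csv(path, index=False)
    return history
```

Keeping one row per day, rather than one row per listing, is what later makes it possible to see how long each posting stays live.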

Advanced Techniques for Deeper Job Insights

Beyond basic scraping, your solution can evolve into a scheduled job posting scraper that collects fresh data at predefined intervals, giving you near-real-time coverage. This removes manual data gathering and enables uninterrupted tracking of hiring patterns across industries, locations, and time periods.
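
Interval-based collection can be sketched as a loop that calls a scraping function, keeps only listings it has not seen before, and sleeps between cycles. `run_scheduled_scrape` and its `fetch_jobs` argument are hypothetical names; `fetch_jobs` stands for any function that wraps Steps 1 and 2 (for example, calling `fetch_google_job_results` and parsing the result) and returns a list of job dictionaries.

```python
import time
from typing import Callable, Iterable


def run_scheduled_scrape(
    fetch_jobs: Callable[[], Iterable[dict]],
    interval_seconds: float,
    max_runs: int,
) -> list[dict]:
    """Call fetch_jobs every interval_seconds and keep only new listings."""
    seen: set = set()
    collected: list = []
    for run in range(max_runs):
        for job in fetch_jobs():
            key = (job.get("title"), job.get("company"), job.get("location"))
            if key not in seen:  # skip listings collected in an earlier run
                seen.add(key)
                collected.append(job)
        if run < max_runs - 1:
            time.sleep(interval_seconds)  # wait before the next collection cycle
    return collected
```

For long-running production use, an external scheduler such as cron is usually sturdier than an in-process loop, since it survives crashes and restarts; the deduplication logic stays the same either way.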

By integrating advanced functionalities, you can transform your script into a powerful Google job search resource that delivers deeper recruitment intelligence.

Key capabilities include:

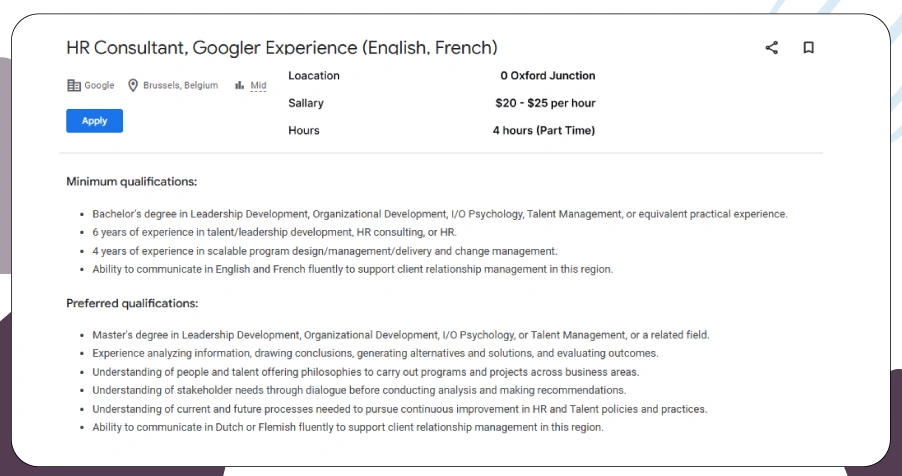

1. Salary Parsing for Compensation Benchmarking

If salary ranges are present in listings, the scraper can automatically extract and standardize this information. This data can then feed into compensation benchmarking models, enabling HR teams and recruiters to compare pay structures across companies, roles, and regions. It helps in identifying competitive salary packages and spotting wage trends over time.
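
The sketch below assumes salary text in formats like `$90K–$120K a year` or `$45–$60 an hour`; the strings Google actually shows can vary, so treat the regular expression as a starting point. Annualizing hourly pay with 2,080 hours (40 hours a week for 52 weeks) is an assumption, as are the `parse_salary` name and its output fields.

```python
import re
from typing import Optional

# Assumed factors for converting pay to an annual figure
PERIOD_MULTIPLIERS = {"hour": 2080, "week": 52, "month": 12, "year": 1}

# One amount: optional "$", digits with optional commas/decimals, optional "K"
AMOUNT = r"\$?\s*(\d[\d,]*(?:\.\d+)?)\s*([kK])?"
SALARY_PATTERN = re.compile(
    AMOUNT
    + r"(?:\s*(?:-|–|to)\s*"  # optional upper bound after a dash or "to"
    + AMOUNT
    + r")?\s*(?:an|a|per|/)?\s*(hour|week|month|year)",
    re.IGNORECASE,
)


def _to_number(digits: str, k_suffix: Optional[str]) -> float:
    value = float(digits.replace(",", ""))
    return value * 1000 if k_suffix else value


def parse_salary(text: str) -> Optional[dict]:
    """Parse strings like '$90K–$120K a year' into annual min/max figures."""
    match = SALARY_PATTERN.search(text)
    if not match:
        return None
    low_digits, low_k, high_digits, high_k, period = match.groups()
    low = _to_number(low_digits, low_k)
    high = _to_number(high_digits, high_k) if high_digits else low
    factor = PERIOD_MULTIPLIERS[period.lower()]
    return {"min_annual": low * factor, "max_annual": high * factor, "period": period.lower()}
```

Normalizing everything to an annual range is what makes hourly contract roles and salaried roles comparable in a single benchmarking table.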

2. Skill Extraction Through Job Description Analysis

By parsing job descriptions, your scraper can identify specific skills and qualifications in demand. This insight allows organizations to adjust training programs, recruitment strategies, and workforce planning to align with evolving industry skill requirements. It also helps job seekers and consultants understand skill gaps in the market.
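
A simple form of skill extraction is whole-word matching against a skills vocabulary, then counting how many descriptions mention each term. The `SKILLS` list and function names below are hypothetical; a real taxonomy would be larger, and terms with punctuation (such as C++ or C#) need special handling because `\b` word boundaries do not work around symbols.

```python
import re
from collections import Counter

# Hypothetical skills vocabulary; replace with your own taxonomy
SKILLS = ("python", "sql", "aws", "docker", "kubernetes", "pandas", "machine learning")


def extract_skills(description: str, vocabulary=SKILLS) -> set:
    """Return vocabulary terms that appear as whole words in the description."""
    text = description.lower()
    found = set()
    for skill in vocabulary:
        # \b word boundaries stop 'sql' from matching inside 'mysql'
        if re.search(rf"\b{re.escape(skill)}\b", text):
            found.add(skill)
    return found


def skill_demand(descriptions: list) -> Counter:
    """Count how many job descriptions mention each skill."""
    counts = Counter()
    for description in descriptions:
        counts.update(extract_skills(description))
    return counts
```

Counting each skill once per description, rather than once per occurrence, keeps a single keyword-heavy posting from skewing the demand figures.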

3. Date Tracking to Measure Hiring Velocity

Capturing when each job advertisement first appears and when it disappears from results lets you estimate how long roles stay open, a practical proxy for time-to-fill. This metric is valuable for workforce planning, indicating how quickly positions are being filled and highlighting areas with talent shortages or surpluses.
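
Job listings often show a relative age (for example "3 days ago") instead of a calendar date. A minimal sketch, assuming labels of that shape: `posted_date` converts the label into an approximate posting date relative to the scrape date, and `listing_duration` counts the days between first posting and the last scrape in which the listing still appeared. Both names are hypothetical; months are approximated as 30 days and hour-based labels are treated as the scrape day.

```python
import re
from datetime import date, timedelta
from typing import Optional

# Matches age labels such as "3 days ago", "21 hours ago" or "30+ days ago"
AGE_PATTERN = re.compile(r"(\d+)\+?\s*(hour|day|week|month)s?\s+ago", re.IGNORECASE)
# Days per unit; hours round down to the scrape day, months are ~30 days
UNIT_DAYS = {"hour": 0, "day": 1, "week": 7, "month": 30}


def posted_date(age_label: str, scraped_on: date) -> Optional[date]:
    """Convert a relative label like '3 days ago' into an approximate date."""
    match = AGE_PATTERN.search(age_label)
    if not match:
        return None
    amount, unit = int(match.group(1)), match.group(2).lower()
    return scraped_on - timedelta(days=amount * UNIT_DAYS[unit])


def listing_duration(first_posted: date, last_seen: date) -> int:
    """Days a listing stayed live: a rough proxy for time-to-fill."""
    return (last_seen - first_posted).days
```

Labels such as "30+ days ago" are capped, so durations for older listings are lower bounds rather than exact values.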

4. Cross-Referencing Across Industries for Market Comparison

By collecting and analyzing postings from multiple sectors, you can perform cross-industry comparisons to identify competitive hiring trends. This includes spotting industries with rising demand, understanding workforce mobility, and detecting emerging job categories.
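
Once postings carry an industry label and a collection period, a cross-industry comparison can start as a simple frequency table. The sketch below uses hypothetical sample rows; the Step 2 parser does not collect industry by itself, so that label would have to come from your own mapping (for example, company to sector). `pd.crosstab` counts postings per industry per ISO week, and the week-over-week difference points to industries with rising demand.

```python
import pandas as pd

# Hypothetical postings: one row per scraped listing, tagged with industry and ISO week
postings = pd.DataFrame({
    "industry": ["Finance", "Finance", "Healthcare", "Tech", "Tech", "Tech"],
    "week": ["2025-W01", "2025-W02", "2025-W02", "2025-W01", "2025-W02", "2025-W02"],
})

# Count postings per industry per week (missing combinations become 0)
weekly_counts = pd.crosstab(postings["industry"], postings["week"])

# Week-over-week change highlights industries with rising demand
weekly_counts["change"] = weekly_counts["2025-W02"] - weekly_counts["2025-W01"]
print(weekly_counts.sort_values("change", ascending=False))
```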

Ethical and Technical Considerations

When scraping job data with Python, following ethical and responsible data collection practices is essential for staying compliant and trustworthy.

Key considerations include:

- Respect robots.txt and site-specific policies to ensure that your scraping activities align with the platform’s usage guidelines.

- Throttle request frequency to prevent excessive server load, ensuring that scraping does not negatively impact the site’s performance.

- Limit data collection to only the information required for legitimate analysis, avoiding unnecessary storage of sensitive or unrelated details.

- Comply with applicable data protection laws and regulations to safeguard both your operations and the rights of individuals whose data may be processed.
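
The first two considerations can be put into practice with Python's standard library. The sketch below checks a URL against robots.txt rules with `urllib.robotparser` and enforces a minimum gap between requests; `is_allowed` and `Throttle` are hypothetical helper names. To run offline, the example parses rules passed in as text; against a live site you would instead call `set_url(...)` and `read()` on the parser to download its robots.txt. Note that Google's own robots.txt disallows `/search` for generic crawlers, so run this check before scraping any search URL.

```python
import time
from urllib import robotparser


def is_allowed(robots_lines: list, url: str, user_agent: str = "*") -> bool:
    """Check a URL against robots.txt rules that have already been downloaded."""
    parser = robotparser.RobotFileParser()
    parser.modified()  # record that rules are loaded; can_fetch() refuses all URLs until then
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval_seconds: float):
        self.min_interval = min_interval_seconds
        self._last_call = None  # monotonic timestamp of the previous request

    def wait(self) -> None:
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
        self._last_call = time.monotonic()
```

Calling `throttle.wait()` immediately before each `requests.get` keeps the request rate at or below one per `min_interval_seconds`, regardless of how quickly parsing finishes.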

Where official APIs are unavailable, a custom alternative to a Google Jobs API can provide structured data retrieval, although it needs ongoing maintenance because page markup changes. Such solutions must also include strong data privacy measures and strictly follow the target platform’s terms of service to maintain ethical integrity.

How ArcTechnolabs Can Help You?

We develop robust, scalable, and compliant solutions that scrape Google Jobs listings for recruitment teams, HR analysts, and workforce planners. Our tailored approach ensures you get clean, structured, and ready-to-use datasets for informed decision-making.

We can help you with:

- Custom scripts for targeted job board scraping.

- Seamless integration with HR analytics systems.

- Real-time monitoring for job market trends.

- Clean, structured datasets ready for analysis.

- Scalable solutions for ongoing recruitment intelligence.

By combining technical expertise with HR-focused strategies, we deliver tools that empower hiring teams to make faster, data-driven decisions. Our proven methods for Google for Jobs data extraction keep your analytics workflows efficient and competitive.

Conclusion

Automating how you scrape Google Jobs listings is a game-changer for HR teams and recruiters aiming to improve hiring decisions. With the right tools, you can track trends, monitor competitors, and optimize your talent acquisition strategy more effectively.

If you’re looking to extract job data from Google with precision and compliance, we can design a custom solution for you. Contact ArcTechnolabs today to discuss your requirements and start building smarter recruitment insights for your organization.